An Important Technology Tip: Identifying AI-Created Deepfake Images

AI manipulation is fast becoming one of the biggest problems online. The rise and misuse of generative AI tools has fueled a surge in deceptive images, videos, and audio.

With AI deepfakes appearing almost constantly, depicting everyone from Taylor Swift to Donald Trump, it is getting harder to tell what is real from what is not. User-friendly tools such as DALL-E, Midjourney, and OpenAI’s Sora let people with no technical expertise create deepfakes simply by typing a request and letting the software generate the content.

These fake images may seem harmless, but they can be used for fraud, identity theft, propaganda, and election manipulation.

Here is how to avoid being fooled by deepfakes.

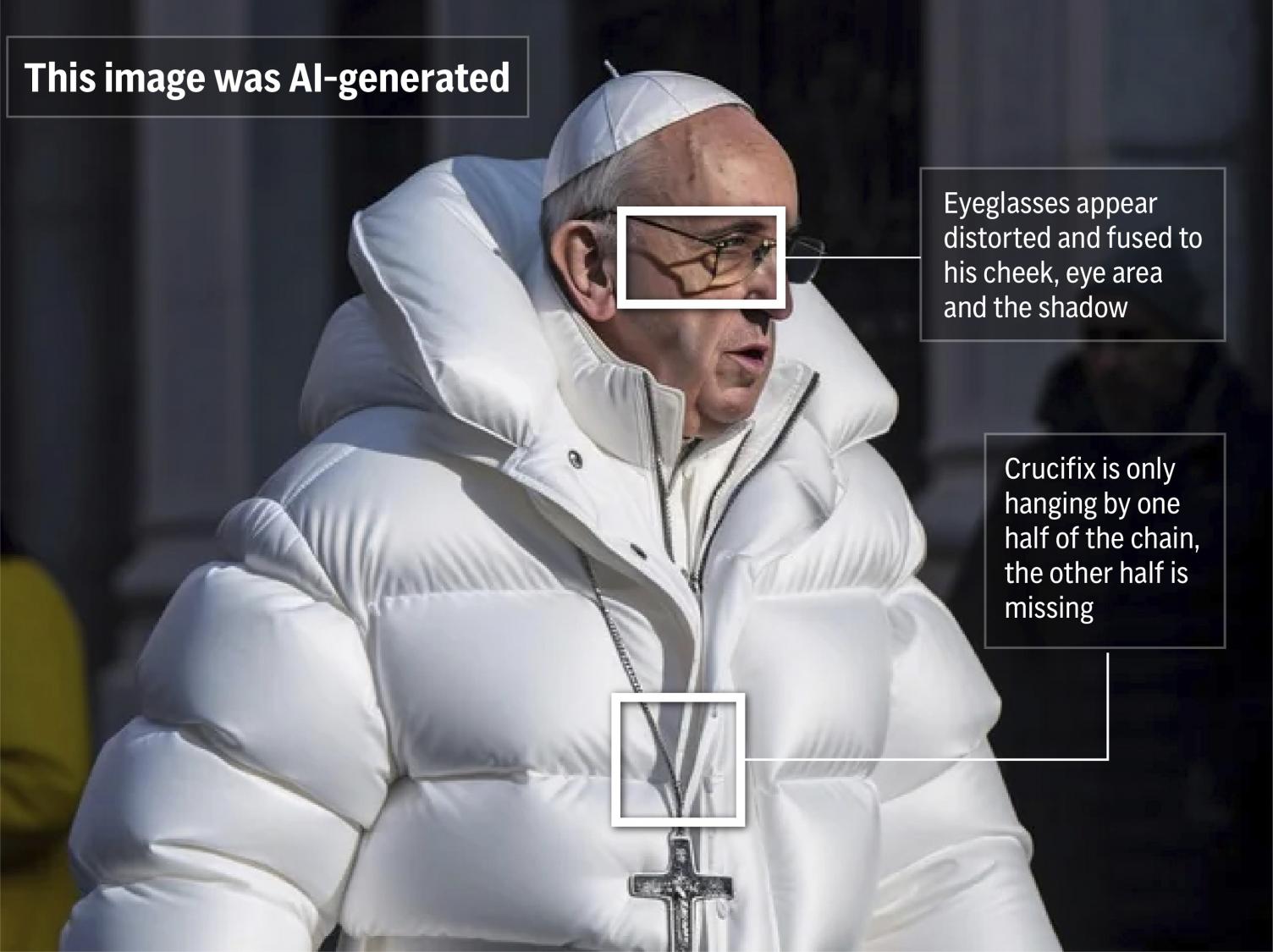

In the early days of deepfakes, the technology was far from perfect and often left telltale signs of manipulation. Fact-checkers have pointed out images with obvious errors, such as hands with six fingers or eyeglasses with mismatched lenses.

But as AI has improved, spotting fakes has become much harder. Some widely shared advice, such as looking for unnatural blinking in deepfake videos, no longer holds up as well, said Henry Ajder, founder of the consulting firm Latent Space Advisory and a leading expert in generative AI.

“However, there are certain factors to keep an eye out for,” he said.

Many AI-generated images, especially those of people, have a digital sheen, Ajder said: a “smooth” aesthetic effect that leaves skin looking highly polished.

He cautioned, however, that creative prompting can sometimes eliminate this and other signs of AI manipulation.

Check whether the shadows and lighting are consistent. Often the main subject is sharply defined and convincingly lifelike, while elements in the background look less natural or polished.

Face-swapping is one of the most common deepfake techniques. Experts advise looking closely at the edges of the face. Does the facial skin tone match the rest of the head or body? Are the edges of the face clear or distorted?

If you suspect that a video of someone speaking has been doctored, watch the mouth. Do the lip movements match the audio exactly?

Ajder suggests looking at the teeth. Are they distinct, or are they distorted and inconsistent with how they look in real life?

Cybersecurity company Norton says algorithms may not yet be sophisticated enough to generate individual teeth, so a lack of distinct outlines for each tooth could be a clue.

Sometimes context matters. Take a moment to consider whether what you are seeing is plausible.

The Poynter journalism website advises that if you see a public figure doing something that seems “overstated, implausible, or out of character,” it could be a deepfake.

Would the pope really wear a luxury puffer jacket, like the one shown in a well-known fake photo? If he did, wouldn’t reputable sources have published more photos or videos?

Another approach is to use AI to fight AI.

Microsoft has developed an authenticator tool that analyzes photos or videos and gives a confidence score indicating whether they have been manipulated. Intel’s FakeCatcher uses algorithms to analyze an image’s pixels to determine whether it is real or fake.

Some online tools claim to detect fakes if you upload a file or paste a link to the suspicious material. But some, such as Microsoft’s authenticator, are available only to selected partners and not to the public. That is because researchers do not want to tip off bad actors and give them an edge in the race to create more convincing deepfakes.

Access to detection tools could also give people the impression that these are godlike technologies that can replace critical thinking, when in fact it is important to recognize the tools’ limitations, Ajder said.

All that said, AI has been advancing rapidly, and AI models are being trained on internet data to produce higher-quality content with fewer errors.

That means there is no guarantee this advice will still be valid even a year from now.

Experts say it could even be risky to expect ordinary people to become skilled at detecting deepfakes, because it may give them a false sense of confidence at a time when spotting fakes is getting harder even for trained professionals.

___

Swenson reported from New York.

___

The Associated Press receives funding from several private foundations to improve its in-depth reporting on elections and democracy. More information on AP’s democracy initiative can be found here. The AP is solely responsible for all of its content.

Source: wral.com